Too little, too late: By the time harmful content posted to Facebook during the Capitol riot period came down, millions had already engaged


Jan 6, 2023

On the afternoon of January 6, 2021, Facebook leadership announced they were “appalled by the violence at the Capitol today,” and were approaching the situation “as an emergency.” Facebook staff were searching for and taking down problematic content, such as posts praising the storming of the U.S. Capitol, calls to bring weapons to protests, and encouragement of the day’s events. At the same time, Facebook announced it would block then-President Donald Trump’s account – a decision the company is now, two years later, considering reversing.

How effective was Facebook’s approach? Not very, concludes a new, comprehensive analysis of more than 2 million posts by researchers at NYU Cybersecurity for Democracy (C4D). The researchers identified 10,811 removed posts among those from 2,551 U.S. news sources. Among their major findings:

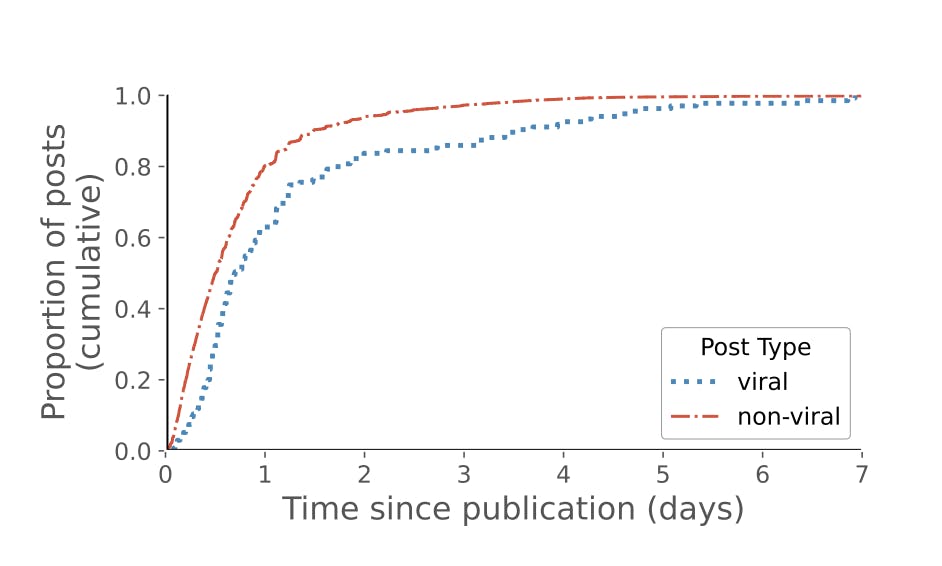

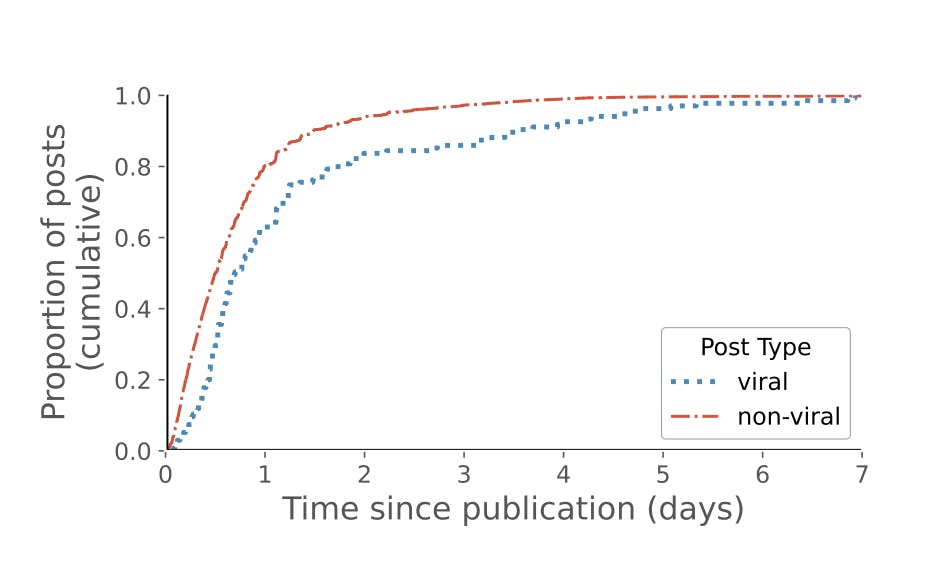

- Nearly 80 percent of potential engagement with posts that were eventually removed happened before they came down. This is because posts tend to get the most engagement – people commenting, “liking,” or otherwise interacting with information – soon after posting. The researchers estimated that, despite the quick intervention, only 21 percent of predicted engagement was prevented.

- Nearly a week after the attack, older posts began coming down. This, however, disrupted less than one percent of predicted future engagement, because by then nearly all the people who were going to engage with this content had already done so.

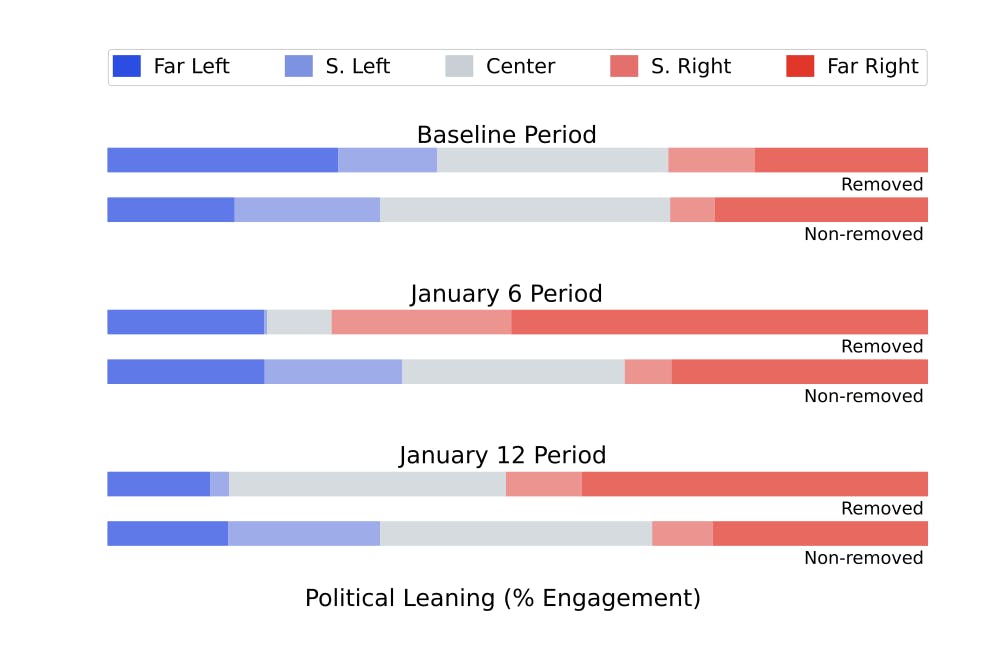

- Facebook was more likely to remove posts by news sources known to spread misinformation, such as “Dan Bongino” on the right and “Occupy Democrats” on the left. During and after the January 6 attack, Facebook removed more content from sites classified as “far right” and “slightly right.”

The researchers could not confirm the specific reasons individual pieces of content came down – whether they were removed by Facebook for violations or by the posters themselves. However, they confirmed that nearly all of the removed content did not match the pattern for voluntary removals. Furthermore, given the company’s public statements, it is likely that the removed posts violated Facebook’s stated standards.

The researchers conclude that takedowns are not the most effective way to stem damage from misinformation. They recommend:

- Decreasing delays in human review and creating policies to nimbly react to major real-world events.

- Changing content recommendation algorithms to slow down engagement with posts, giving Facebook content moderators more time to intervene effectively.

- Creating procedures for manual review of posts that show signs of “going viral.”

Engagement with removed and non-removed posts from U.S. news pages by partisanship for the baseline, January 6, and January 12 periods, respectively. Around January 6, the vast majority of engagement with posts that were ultimately removed went to posts from sources classified as Slightly Right and Far Right.

Methodology

The data set used by C4D researchers for this analysis consists of public Facebook posts of 2,551 U.S. news publishers during and after the 2020 U.S. presidential election. The data set also includes third-party data provided by the news rating organizations NewsGuard and Media Bias/Fact Check. Based on the assessments by these organizations, the authors derived the political leaning of each news publisher (far left to far right) as well as a binary “misinformation” attribute indicating whether a news publisher had a known history of spreading misinformation.

The researchers collected all public Facebook posts and corresponding engagement metadata from the 2,551 U.S. news publishers by crawling the CrowdTangle API, covering dates from August 10, 2020 until January 18, 2021.

For the current analysis, C4D researchers estimate how much more user engagement a post might have accrued had it not been deleted. Because engagement accrues differently for each post, but potentially more similarly among posts from the same publisher, they estimate a post’s engagement potential based on non-deleted posts from the same Facebook page. To validate their engagement predictions, they tested their methodology on a sample of 10,000 non-deleted posts published during the same time period.
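To make the idea concrete, here is a minimal sketch of one way such an estimate could work. It is an illustration only, not the researchers’ actual model: the function names, the use of a median, and the sample numbers are all assumptions. It takes engagement curves of non-deleted posts from the same page, asks what share of their final engagement they had typically reached at a given age, and scales up the removed post’s observed engagement accordingly.

```python
from statistics import median


def fraction_reached(curves, age_hours):
    """Median share of final engagement that reference posts had reached by age_hours.

    curves: one list per non-deleted post from the same page, each a list of
    (age_hours, cumulative_engagement) pairs sorted by age. Hypothetical format.
    """
    shares = []
    for curve in curves:
        final = curve[-1][1]
        if final <= 0:
            continue  # skip posts that never received any engagement
        # Cumulative engagement observed at or before the given age.
        reached = max((eng for age, eng in curve if age <= age_hours), default=0)
        shares.append(reached / final)
    if not shares:
        raise ValueError("no usable reference posts")
    return median(shares)


def estimate_prevented(observed_engagement, removal_age_hours, curves):
    """Estimate engagement a removed post would still have gained if left up."""
    share = fraction_reached(curves, removal_age_hours)
    if share <= 0:
        raise ValueError("reference posts show no engagement at this age")
    predicted_total = observed_engagement / share
    return predicted_total - observed_engagement


# Made-up reference posts: engagement after 1 hour, 1 day, and 1 week.
reference_curves = [
    [(1, 50), (24, 80), (168, 100)],
    [(1, 30), (24, 90), (168, 100)],
    [(1, 40), (24, 70), (168, 100)],
]

# A post removed after 24 hours with 400 interactions so far.
print(estimate_prevented(400, 24, reference_curves))
```

In this made-up example the reference posts had typically reached about 80 percent of their final engagement after one day, so removing the post at that point prevents only about a fifth of what it was predicted to accrue. That is the same dynamic the findings above describe.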

Days until non-removed baseline posts reach 80% of their lifetime engagement. Around 78% of non-viral posts reach 80% of their lifetime engagement within one day, whereas it takes two days for viral posts.

Footnotes:

[1] Laura Edelson, Minh-Kha Nguyen, Ian Goldstein, Oana Goga, Damon McCoy, and Tobias Lauinger. 2021. Understanding engagement with U.S. (Mis)information news sources on Facebook. In Internet Measurement Conference (IMC). ACM. https://doi.org/10.1145/3487552.3487859

Full paper: