Summary of findings: Conspiracy brokers - understanding the monetization of YouTube conspiracy theories

In this first-of-its-kind study, researchers reviewed advertising traffic on YouTube to better understand how conspiracy content is monetized. By evaluating ads that ran on YouTube channels hosting conspiracy content, the team found that these channels carried nearly 11 times the prevalence of likely predatory or deceptive ads compared with mainstream YouTube channels, making them fertile ground for predatory advertising.

YouTube is a popular gateway for conspiracy theories — from QAnon to the belief that the earth is flat — and also provides ample opportunities for publishers of these theories to finance their work. In a first-of-its-kind study, researchers at New York University looked at advertising on YouTube channels that run conspiracy content and found:

- Conspiracy channels had nearly 11 times the prevalence of likely predatory or deceptive ads when compared to mainstream YouTube channels.

- Conspiracy channels carried just 16 percent of the ad volume of mainstream channels, suggesting they may have been “demonetized,” or excluded from advertising because their content is deemed unfriendly to advertisers.

- Conspiracy channels were twice as likely to feature non-advertising ways to monetize content, such as donation links for Patreon, GoFundMe and PayPal. For more on this aspect, see this study by researchers at Cornell Tech.

The study, Conspiracy Brokers: Understanding the Monetization of YouTube Conspiracy Theories, was led by NYU’s Cybersecurity for Democracy project and its co-director Damon McCoy. It suggests a YouTube ecosystem that offers predatory advertisers ready access to viewers of conspiracy content, as publishers take advantage of options for monetizing that content.

In conspiracy channels on YouTube, researchers found that:

- Certain scams were more common. Self-improvement ads, many of them get-rich-quick schemes, appeared more frequently than in mainstream content. So did lifestyle, health and insurance ads, including two advertisers unique to conspiracy channels that were generating leads for insurance scammers. Ads promoting questionable products were also common, such as a supplement that claimed to cure Type 2 diabetes.

- Affiliate marketing was a constant. Among those marketing low-quality products, for example, almost 95 percent used some form of affiliate marketing.

- Videos with ads got far more views. In the conspiracy channels, monetized videos had almost four times as many views as demonetized ones. Since YouTube’s business model relies on advertising, this may be because its recommender algorithm prioritizes videos that contain ads.

- Content pointed to alternative social media sites. Sites like Gab, Parler and Telegram were mentioned more commonly in conspiracy channels than in mainstream ones; Facebook and Twitter were also frequently referenced.

Predatory ads revealed by C4D research:

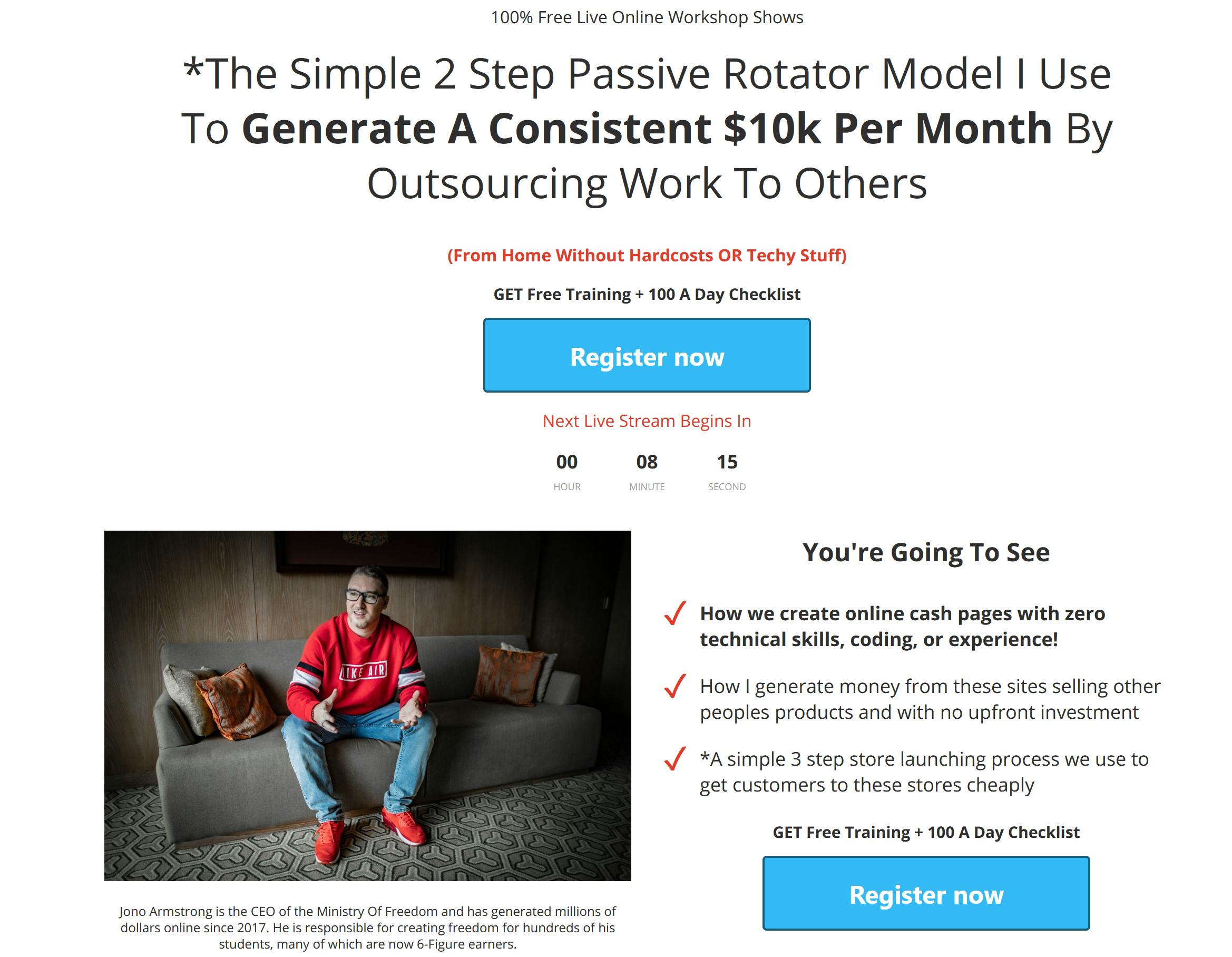

Predatory advertisers exploit consumers’ vulnerabilities to manipulate them into unfavorable market transactions. Figures 1 and 2 show examples:

Figure 1: Ads for biblical-secret.com market a book of essential oil recipes that promise near-immortality and target older viewers of conspiracy content.

Figure 2: The most prolific of the scam work-from-home ads were for Ministry of Freedom and linked to over a dozen domains, including ministry-of-freedom.com and freedomministry.com. In March 2021, the FTC banned schemes like these.

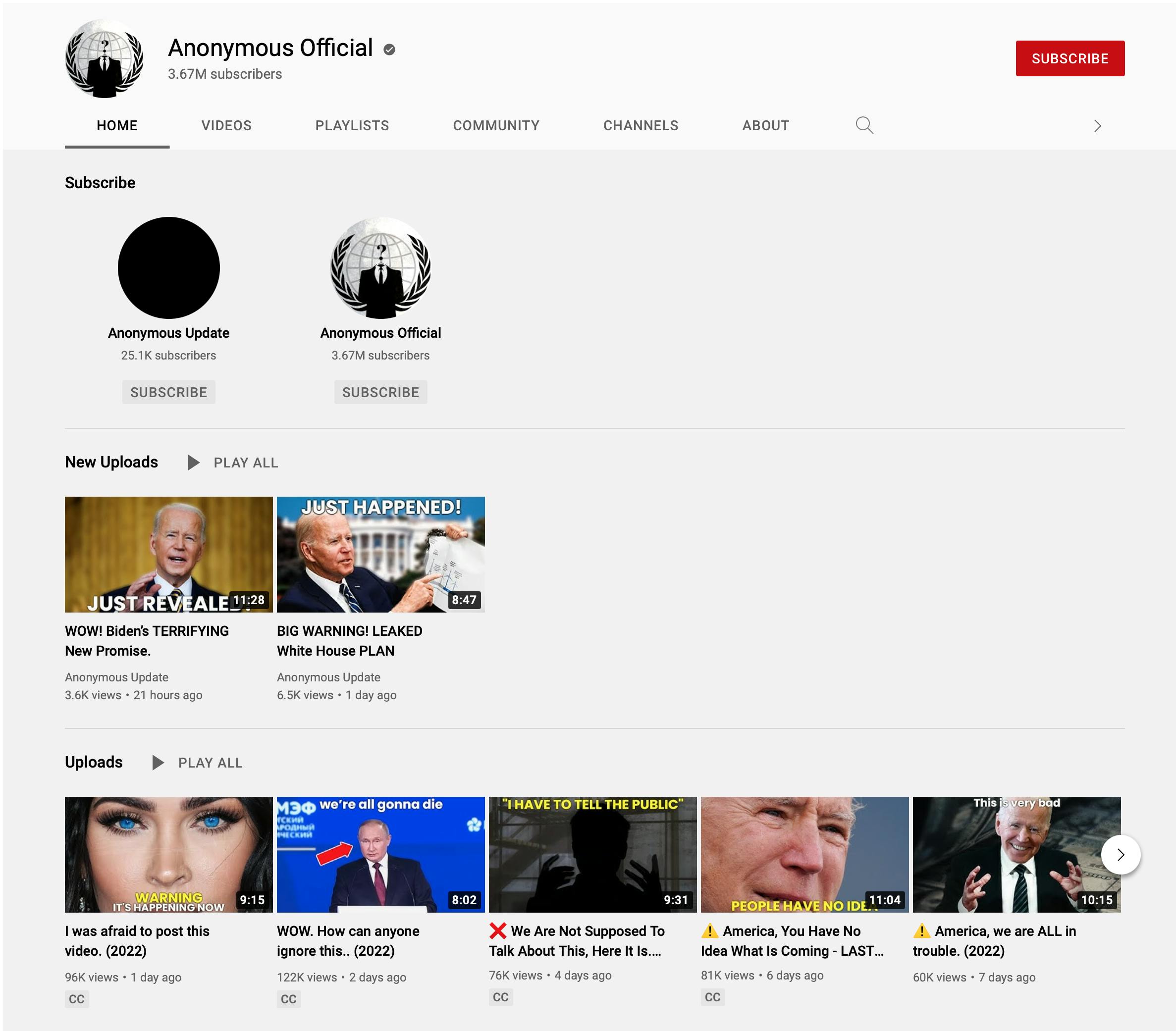

Conspiracy channels

A conspiracy theory is defined as “a belief that some covert but influential organization is responsible for a circumstance or event” (Oxford English Dictionary). Per the authors, conspiracies on YouTube “are dynamic and greatly varied, adapt quickly to current events, and include topics such as the QAnon conspiracy, New World Order, Galactic Federation, vaccine skepticism, COVID denial, Jeffrey Epstein’s suicide, biblical predictions, higher consciousness, aliens, UFOs, and the deep state.” The channel shown in Figure 3 is the most popular of the conspiracy channels that C4D researchers monitored. It claims to be the “official” Anonymous channel on YouTube.

Figure 3: Anonymous “Official” YouTube conspiracy channel

Recommendations

Given the diverse ways conspiracy publishers finance their content, cross-platform moderation and greater transparency into YouTube’s targeting and ad delivery practices are needed to better protect consumers from predatory advertising and other harmful content.

Study methodology

For 4.5 months, researchers reviewed YouTube channels that two different external studies had flagged for conspiracy content, along with “mainstream” channels for comparison. They observed three types of ads:

- Video ads that run before a YouTube video (preroll ads).

- Simpler text and image banner ads that show up while a video is running.

Sidebar video ads were not reviewed because they tend to come from the same source as the preroll ads.

The goal of the study was to better understand how conspiracy publishers finance their work, which is a major contributor to the epidemic of online mis- and disinformation.

The New York University-based team of authors includes: Cameron Ballard, Ian Goldstein, Pulak Mehta, Genesis Smothers, Kejsi Take, Victoria Zhong, Rachel Greenstadt, Tobias Lauinger, and Damon McCoy.

About Cybersecurity for Democracy

Cybersecurity for Democracy is a research-based, nonpartisan, and independent effort to expose online threats to our social fabric – and recommend how to counter them. It is a part of the Center for Cybersecurity at the NYU Tandon School of Engineering.